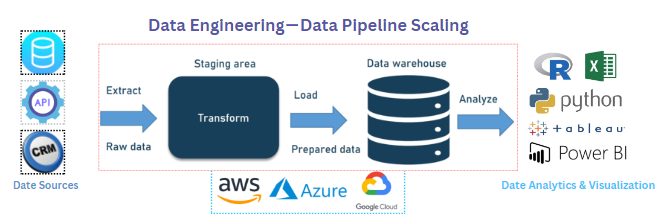

If you work as a data engineer, data analyst, or data scientist on a project that uses a fairly standard ELT architecture to extract data from several sources into on-premise or cloud-based systems, this article is a good fit.

Data Curiosity: Before you begin building your data pipeline, cultivate data curiosity; it is essential for any company that values data. It is a constantly evolving part of data culture that pushes you to seek out new or existing data, challenge it, and use it to make more accurate decisions. In practice, that means asking questions about the data patterns within your source systems, such as:

- How much data is in the DB?

- How much is in the API?

- Are queries to the API deterministic?

- Do they have cases of combinatorial explosion, or is it fairly straightforward?
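One way to probe the determinism question is to issue the same query several times and compare fingerprints of the responses. The following is a minimal sketch, not a definitive method: `fetch` is a hypothetical zero-argument callable that you would wrap around your own API client, and it assumes the response is JSON-serializable.

```python
import hashlib
import json
from typing import Any, Callable


def response_fingerprint(payload: Any) -> str:
    """Hash a JSON-serializable payload so that dict key order does not matter."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def is_deterministic(fetch: Callable[[], Any], attempts: int = 3) -> bool:
    """Return True if repeated calls to `fetch` (hypothetical) yield identical payloads."""
    fingerprints = {response_fingerprint(fetch()) for _ in range(attempts)}
    return len(fingerprints) == 1
```

A `False` result does not necessarily mean the API is broken; it may simply return rows in an unstable order or include timestamps, which is worth knowing before you design reconciliation logic.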

To make these questions concrete, assume that the data in the database consists of customer-level aggregates across multiple dimensions. These are already quite large, whether they live in Snowflake, an on-premise database, or another cloud-based database, and they will grow linearly with the customer base. API access consists of both point and range queries, and range queries require paginated responses. Moving this data to an RDBMS at regular intervals is an option, but it adds complexity: load frequency to tune, extra pressure on the database, and another layer to reconcile.
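The paginated range queries mentioned above can be consumed with a small generator that keeps requesting pages until the API reports no further cursor. This is a sketch under stated assumptions: the response shape (`items`, `next_cursor`) and the `fetch_page` callable are hypothetical, and `make_fake_api` is only an in-memory stand-in for a real endpoint.

```python
from typing import Any, Callable, Dict, Iterator, List, Optional

Page = Dict[str, Any]  # assumed shape: {"items": [...], "next_cursor": str or None}


def iter_range_query(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[Any]:
    """Yield every record of a range query, following cursors page by page."""
    cursor: Optional[str] = None
    while True:
        page = fetch_page(cursor)
        yield from page.get("items", [])
        cursor = page.get("next_cursor")
        if cursor is None:  # no further pages
            break


def make_fake_api(records: List[Any], page_size: int) -> Callable[[Optional[str]], Page]:
    """Build an in-memory stand-in for a paginated endpoint (illustration only)."""
    def fetch_page(cursor: Optional[str]) -> Page:
        start = int(cursor) if cursor is not None else 0
        end = start + page_size
        next_cursor = str(end) if end < len(records) else None
        return {"items": records[start:end], "next_cursor": next_cursor}
    return fetch_page


if __name__ == "__main__":
    fetch = make_fake_api(list(range(7)), page_size=3)
    for record in iter_range_query(fetch):
        print(record)
```

Because the generator yields records lazily, downstream load steps can process each page as it arrives instead of holding the whole range in memory.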

To read the full story, please see my Medium article here.
