To gain profit and actionable insights from day-to-day business data, organizations increasingly rely on modern data architectures. One such influential framework is the Medallion Architecture, which has become a key design pattern as data platforms continue to evolve.

The three-layer medallion architecture was devised primarily for analytics on structured data, not for more operational needs. Supporting those needs requires some additional concepts.

We see the design itself as coming from Bill Inmon’s “The Corporate Information Factory”. It was published before the name medallion became popular, and well before much of the technology discussed here was available, so parts of it were purely theoretical. Still, he described the concept and even mentioned the kinds of data and features it would need to work. We also borrow a lot from our experience with Databricks: that platform provides many of these features out of the box and integrates well with others.

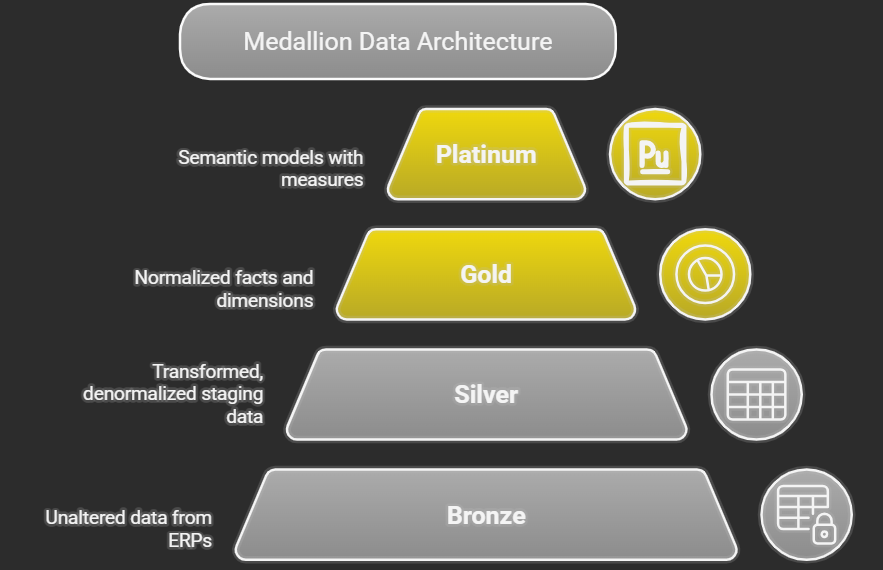

This architecture plays a crucial role in the lakehouse paradigm, a modern approach that blends the flexibility of data lakes with the reliability and performance of data warehouses. By structuring data into layered zones — typically Bronze (raw data), Silver (cleaned and enriched data), and Gold (business-ready data) — the Medallion Architecture enables efficient data processing, quality improvement, and analytics-driven decision-making.

The Data Medallion architecture is a common approach to organizing and processing data in a data lake or data warehouse, providing a structured framework for data ingestion, transformation, and consumption. Each layer represents a stage in the data’s journey, with increasing levels of refinement, quality, and business readiness.

There are no hard and fast rules governing where the borders between these data layers fall. For example, some people type and label things consistently in bronze, whereas others do it in silver. Some consider everything with modeling to be gold, whilst others consider only final reports or marts to be gold. As a general rule, you want to have at least:

👉A clear area where data coming from the sources lands with as few changes as possible. It serves some subset of these purposes: a basis for downstream transformation, storage for replaying history, evidence for reconciliation if you need it, and decoupling from the sources. This is called bronze in a medallion architecture.

👉A clear area where you have data that has been validated for quality and consistency across sources, with as little detail as possible lost. This serves as the source of data for more complex or aggregated reports and analyses, feeds your ML models (if you have them), applies general business rules for derived data consistently across domains (when they apply across the board and at a granular level), and is overall the “single source of truth” for your whole data platform. This is called silver in the medallion architecture.

👉A number of schemas serving specific business applications/domains/data products, with heavy transformation (often aggregation) to fit the specific requirements of each, also optimized for performance for specific access patterns and tools. This is what most users will ever see. In the medallion architecture, this is called gold.

The 3 layers above are pretty much the standard and have been so since before the name medallion architecture came along. I remember calling them staging/ODS, DW and Data Marts over 25 years ago. As we move into a new era, these layers are simply getting more attractive names.

Bronze Layer (Raw Data) - The Bronze layer, also known as the raw or landing zone, is the initial entry point for data into the data lake. It serves as a repository for data in its original, unprocessed format. The primary goal of the Bronze layer is to ingest data quickly and reliably, preserving its fidelity for auditing and potential reprocessing.

Key Characteristics:

- Raw and Untouched: Data is stored in its original format, as received from the source systems. No transformations, cleansing, or enrichment are performed at this stage.

- Schema-on-Read: The schema is not enforced during data ingestion. The structure of the data is discovered and applied when the data is read. This allows for flexibility in handling diverse data sources and evolving schemas.

- Data Retention: Data is typically retained in the Bronze layer for a long period, often indefinitely, to provide a historical record of all ingested data. This enables auditing, data lineage tracking, and the ability to reprocess data if needed.

- Data Sources: Data can come from various sources, including:

  - Databases (e.g., transactional systems, operational databases)

  - Applications (e.g., CRM, ERP)

  - Files (e.g., CSV, JSON, Parquet)

  - Streams (e.g., Kafka, Apache Pulsar)

  - External APIs

- Storage Format: Common storage formats include:

  - Parquet: A columnar storage format that is efficient for analytical queries.

  - Avro: A row-based storage format that supports schema evolution.

  - JSON: A human-readable format that is suitable for semi-structured data.

  - CSV: A simple format for tabular data.

- Data Governance: Basic data governance practices are applied, such as:

  - Data lineage tracking: Recording the source and transformations applied to the data.

  - Data access control: Restricting access to sensitive data.

- Example Use Cases:

  - Storing raw log files from web servers.

  - Ingesting data from a CRM system without any transformations.

  - Archiving data from a legacy database.
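The "raw and untouched" and "schema-on-read" characteristics listed above can be illustrated with a minimal in-memory sketch. Everything here is an assumption made for illustration: the `land_raw` and `read_orders` helpers, the metadata field names, and the use of a plain Python list in place of real lake storage.

```python
import json
from datetime import datetime, timezone


def land_raw(bronze: list, payload: str, source: str) -> None:
    """Append the payload exactly as received, wrapped in ingestion metadata."""
    bronze.append({
        "source": source,  # basic lineage: where the record came from
        "ingested_at": datetime.now(timezone.utc).isoformat(),
        "raw": payload,    # original text, never parsed or corrected here
    })


def read_orders(bronze: list):
    """Schema-on-read: structure is applied only when the data is consumed."""
    for record in bronze:
        try:
            doc = json.loads(record["raw"])
        except json.JSONDecodeError:
            continue  # malformed payloads stay in bronze; this reader skips them
        yield {"order_id": doc.get("id"), "amount": doc.get("amount")}


bronze_store = []
land_raw(bronze_store, '{"id": 1, "amount": "19.90"}', source="crm")
land_raw(bronze_store, "not valid json", source="crm")

orders = list(read_orders(bronze_store))
```

Both payloads are kept in `bronze_store`, including the malformed one, and `amount` is still a string when read: type conversion is deliberately left to a later layer in this sketch.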

Benefits:

- Data Preservation: Ensures that the original data is always available.

- Flexibility: Accommodates diverse data sources and evolving schemas.

- Auditing: Provides a complete audit trail of all ingested data.

- Reprocessing: Enables the ability to reprocess data with new or updated transformations.

Considerations:

- Storage Costs: Storing large volumes of raw data can be expensive.

- Query Performance: Querying raw data can be slow due to the lack of structure and optimization.

- Data Quality: The Bronze layer contains data with varying levels of quality, which may require further processing before it can be used for analysis.
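The reprocessing benefit mentioned for the Bronze layer can be sketched roughly as follows. The two `conform_*` functions and the country alias table are hypothetical; the point is only that downstream data is derived, so it can be recomputed from unchanged raw records when a transformation rule changes.

```python
from typing import Callable

# Raw records as they landed; these are never modified.
bronze = [
    {"raw": {"country": "us"}},
    {"raw": {"country": "United States"}},
    {"raw": {"country": "DE"}},
]


def conform_v1(raw: dict) -> dict:
    # First version of the rule: only upper-case the value.
    return {"country": raw["country"].upper()}


def conform_v2(raw: dict) -> dict:
    # Updated rule: also map a known long-form name to its code.
    aliases = {"UNITED STATES": "US"}
    value = raw["country"].upper()
    return {"country": aliases.get(value, value)}


def rebuild_silver(bronze_rows: list, transform: Callable[[dict], dict]) -> list:
    # Silver is derived, so it can be dropped and recomputed from bronze.
    return [transform(row["raw"]) for row in bronze_rows]


silver_v1 = rebuild_silver(bronze, conform_v1)
silver_v2 = rebuild_silver(bronze, conform_v2)
```

Replaying the same bronze rows through `conform_v2` fixes the "United States" row without going back to the source system.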

Silver Layer (Cleaned and Conformed Data) - The Silver layer, also known as the curated or refined zone, builds upon the Bronze layer by applying transformations to clean, conform, and enrich the data. The goal of the Silver layer is to create a consistent and reliable dataset that is suitable for downstream analytics and reporting.

Key Characteristics:

- Cleaned Data: Data is cleansed to remove errors, inconsistencies, and duplicates. This may involve:

  - Data type conversions

  - Handling missing values

  - Standardizing data formats

  - Validating data against business rules

- Conformed Data: Data is conformed to a consistent schema and data model. This may involve:

  - Mapping data from different sources to a common schema

  - Resolving naming conflicts

  - Creating surrogate keys

- Enriched Data: Data is enriched with additional information from other sources. This may involve:

  - Joining data from multiple tables

  - Adding calculated fields

  - Looking up data from external APIs

- Schema-on-Write: The schema is enforced during data transformation. This ensures that the data in the Silver layer conforms to a predefined structure.

- Data Quality: Data quality checks are performed to ensure that the data meets predefined standards.

- Data Governance: More advanced data governance practices are applied, such as:

  - Data profiling: Analyzing the data to identify patterns and anomalies.

  - Data quality monitoring: Tracking data quality metrics over time.

  - Data masking: Protecting sensitive data by masking or anonymizing it.

- Storage Format: Common storage formats include:

  - Parquet: A columnar storage format that is efficient for analytical queries.

  - Delta Lake: An open-source storage layer that provides ACID transactions and schema evolution.

- Example Use Cases:

  - Cleaning and conforming customer data from multiple sources.

  - Creating a unified view of product data from different systems.

  - Enriching sales data with demographic information.
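As a rough illustration of the cleaning and conforming steps listed above, the sketch below merges customer rows from two sources into one schema. The source names, column names, and the two date formats are invented for the example; a real pipeline would more likely use Spark or SQL than plain Python.

```python
from datetime import datetime
from decimal import Decimal

# Hypothetical raw rows from two sources with different names and formats.
crm_rows = [
    {"CustomerEmail": " Ann@Example.com ", "signup": "2024-01-05", "spend": "10.50"},
    {"CustomerEmail": "ann@example.com", "signup": "2024-01-05", "spend": "10.50"},
]
shop_rows = [
    {"email": "bob@example.com", "signup_date": "05/02/2024", "spend": ""},
]


def parse_date(text: str) -> str:
    # Standardize the two date formats assumed here into ISO 8601.
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            pass
    raise ValueError(f"unrecognized date: {text!r}")


def conform(email: str, signup: str, spend: str) -> dict:
    return {
        "email": email.strip().lower(),              # standardize format
        "signup_date": parse_date(signup),           # type conversion
        "spend": Decimal(spend) if spend else None,  # explicit missing value
    }


# Map both sources onto the common schema.
conformed = [conform(r["CustomerEmail"], r["signup"], r["spend"]) for r in crm_rows]
conformed += [conform(r["email"], r["signup_date"], r["spend"]) for r in shop_rows]

# Deduplicate on the business key, then assign surrogate keys.
unique = {row["email"]: row for row in conformed}
silver_customers = [
    {"customer_sk": sk, **row} for sk, row in enumerate(unique.values(), start=1)
]
```

The two CRM rows collapse into one customer once the email is trimmed and lower-cased, and the empty `spend` from the shop source becomes an explicit `None` rather than an empty string.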

Benefits:

- Improved Data Quality: Provides a clean and consistent dataset for analysis.

- Simplified Data Access: Makes it easier for users to access and query data.

- Enhanced Data Governance: Improves data governance and compliance.

- Increased Trust: Increases trust in the data and its accuracy.

Considerations:

- Transformation Complexity: Data transformation can be complex and time-consuming.

- Data Latency: Data transformation can introduce latency into the data pipeline.

- Storage Costs: Storing transformed data can be expensive.
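The schema-on-write and data quality checks described for the Silver layer might look something like the sketch below. The `SILVER_SCHEMA` definition, the non-negative amount rule, and the idea of setting rejected rows aside in a quarantine list are assumptions for illustration, not something the architecture prescribes.

```python
# Hypothetical target schema: column name -> required Python type.
SILVER_SCHEMA = {"order_id": int, "amount": float, "currency": str}


def validate(row: dict) -> list:
    """Return a list of problems; an empty list means the row may be written."""
    if set(row) != set(SILVER_SCHEMA):
        return ["columns do not match schema"]
    problems = []
    for column, expected in SILVER_SCHEMA.items():
        if not isinstance(row[column], expected):
            problems.append(f"{column} is not {expected.__name__}")
    # Example business rule, checked only once the types are known to be valid.
    if not problems and row["amount"] < 0:
        problems.append("amount must be non-negative")
    return problems


def write_silver(rows: list):
    accepted, quarantined = [], []
    for row in rows:
        problems = validate(row)
        if problems:
            quarantined.append({"row": row, "problems": problems})
        else:
            accepted.append(row)
    return accepted, quarantined


accepted, quarantined = write_silver([
    {"order_id": 1, "amount": 19.9, "currency": "EUR"},
    {"order_id": "2", "amount": 5.0, "currency": "EUR"},
    {"order_id": 3, "amount": -1.0, "currency": "EUR"},
    {"order_id": 4, "amount": 7.5},
])
```

Keeping rejected rows together with the reason they failed gives the data quality monitoring mentioned earlier something concrete to count over time.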

Gold Layer (Aggregated and Business-Ready Data) - The Gold layer, also known as the business or consumption zone, is the final stage in the Data Medallion architecture. It contains data that has been aggregated, summarized, and transformed into a format that is optimized for specific business use cases. The goal of the Gold layer is to provide users with easy access to high-quality, business-ready data that can be used for reporting, analytics, and decision-making.

Key Characteristics:

- Aggregated Data: Data is aggregated and summarized to provide a high-level view of the business. This may involve:

  - Calculating key performance indicators (KPIs)

  - Creating summary tables

  - Building data cubes

- Business-Ready Data: Data is transformed into a format that is optimized for specific business use cases. This may involve:

  - Creating star schemas or snowflake schemas

  - Denormalizing data

  - Adding business-specific calculations

- Optimized for Query Performance: Data is stored in a format that is optimized for query performance. This may involve:

  - Using columnar storage formats

  - Creating indexes

  - Partitioning data

- Data Governance: Strict data governance practices are applied to ensure data quality and compliance.

- Data Security: Data security measures are implemented to protect sensitive data.

- Storage Format: Common storage formats include:

  - Parquet: A columnar storage format that is efficient for analytical queries.

  - Delta Lake: An open-source storage layer that provides ACID transactions and schema evolution.

  - Data warehouse tables: Formats optimized for specific data warehouse solutions (e.g., Redshift, Snowflake, BigQuery).

- Example Use Cases:

  - Creating dashboards and reports for business users.

  - Building machine learning models.

  - Providing data for ad-hoc analysis.
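The aggregation step listed under the Gold layer's key characteristics can be sketched as a summary table with a few KPIs. The `silver_sales` rows, the grouping by date and region, and the chosen KPIs (revenue, order count, average order value) are hypothetical examples.

```python
from collections import defaultdict

# Hypothetical cleaned, row-level sales data from the Silver layer.
silver_sales = [
    {"order_date": "2024-03-01", "region": "EMEA", "amount": 120.0},
    {"order_date": "2024-03-01", "region": "EMEA", "amount": 80.0},
    {"order_date": "2024-03-01", "region": "APAC", "amount": 50.0},
    {"order_date": "2024-03-02", "region": "EMEA", "amount": 40.0},
]


def daily_sales_by_region(rows):
    """Aggregate row-level sales into one summary row per (date, region)."""
    totals = defaultdict(lambda: {"revenue": 0.0, "orders": 0})
    for row in rows:
        key = (row["order_date"], row["region"])
        totals[key]["revenue"] += row["amount"]
        totals[key]["orders"] += 1
    return [
        {
            "order_date": order_date,
            "region": region,
            "revenue": agg["revenue"],
            "orders": agg["orders"],
            "avg_order_value": agg["revenue"] / agg["orders"],
        }
        for (order_date, region), agg in sorted(totals.items())
    ]


gold_daily_sales = daily_sales_by_region(silver_sales)
```

A dashboard would read `gold_daily_sales` directly instead of recomputing the totals from row-level data on every query.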

Benefits:

- Improved Decision-Making: Provides users with the data they need to make informed decisions.

- Increased Efficiency: Reduces the time and effort required to access and analyze data.

- Enhanced Business Agility: Enables businesses to respond quickly to changing market conditions.

- Single Source of Truth: Provides a single source of truth for business data.

Considerations:

- Data Latency: Data transformation can introduce latency into the data pipeline.

- Storage Costs: Storing aggregated data can be expensive.

- Data Governance: Requires strict data governance practices to ensure data quality and compliance.
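The Gold layer's key characteristics mention star schemas. A minimal sketch of splitting Silver rows into one dimension and one fact table follows; the table names, columns, and the simple counter used for surrogate keys are assumptions made for the example.

```python
# Hypothetical conformed order rows from the Silver layer.
silver_orders = [
    {"order_id": 1, "customer": "Ann", "country": "DE", "amount": 30.0},
    {"order_id": 2, "customer": "Bob", "country": "US", "amount": 45.0},
    {"order_id": 3, "customer": "Ann", "country": "DE", "amount": 25.0},
]


def build_star(rows):
    """Split flat rows into a customer dimension and an orders fact table."""
    dim_customer = {}  # natural key -> dimension row
    fact_orders = []
    for row in rows:
        natural_key = (row["customer"], row["country"])
        if natural_key not in dim_customer:
            dim_customer[natural_key] = {
                "customer_key": len(dim_customer) + 1,  # surrogate key
                "customer": row["customer"],
                "country": row["country"],
            }
        # The fact row keeps only keys and numeric columns.
        fact_orders.append({
            "order_id": row["order_id"],
            "customer_key": dim_customer[natural_key]["customer_key"],
            "amount": row["amount"],
        })
    return list(dim_customer.values()), fact_orders


dim_customer, fact_orders = build_star(silver_orders)
```

Descriptive attributes live once in `dim_customer`, while `fact_orders` carries only the key and the amount, which is the shape most BI tools and semantic models expect.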

The Data Medallion architecture provides a structured approach to managing data in a data lake or data warehouse. By organizing data into Bronze, Silver, and Gold layers, organizations can ensure that data is ingested, transformed, and consumed in a consistent and reliable manner. This leads to improved data quality, simplified data access, and enhanced business agility.

A chatbot, for example, would interact with a custom domain in the gold layer. There are many technical considerations besides the data architecture. You might need specific databases to meet technical and latency requirements while properly representing the different data types. You may need transactional capabilities for tracking and logging the chatbot’s conversations. You would also want to plan how to handle the access control, dependencies, and observability of this stack. It can get quite complicated depending on how you approach it, but this goes into the technical architecture, beyond just the data architecture.

An alternative way to draw the boundaries, with a fourth layer:

Bronze: unaltered data captured from ERPs and other source systems via any mode of ingestion or data dump.

Silver: staging data, absolutely not for reporting. Transformation begins here; very denormalized.

Gold: “Facts” and “Dimensions”, normalized (for analytical data) and ready for the semantic model. NO MEASURES. Naming conventions are aimed at data engineers.

Consumption/Platinum: this layer can use bronze, silver, or gold depending on the app's needs. The semantic models, star schemas, and measures get defined here. Naming conventions are aimed at reports.
