Cloud is the fuel that drives today’s digital organisations, where businesses pay only for the services and resources that they actually use over a period of time.

If you are evaluating Databricks (on AWS/Azure) and Snowflake for your company, other users’ experiences and thoughts can help answer your questions.

Consider an example scenario where no cluster or SQL warehouse is running on either platform. A data analyst comes in and starts hitting a table with some queries, and this is what happens on each platform.

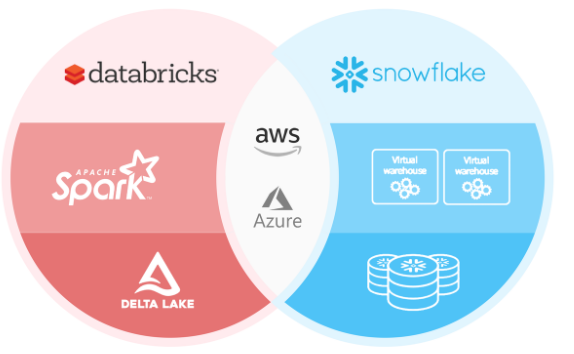

Databricks: It takes 3–4 minutes to spin up the compute cluster/SQL warehouse, and the first query that hits it takes a long time to return results. Databricks is more open, and the features they are releasing cover most needs, such as data governance, security, change data capture, and AI/ML.

Snowflake: The SQL warehouse startup time is within seconds, and even the first query returns results quickly.

We can create cluster pools and keep some clusters idle (warm) in Databricks, which can reduce startup time, but are we not paying more money to keep servers inactive 24/7?
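To make this trade-off concrete, here is a minimal back-of-the-envelope sketch in Python. The pool size, the hourly VM rate, and the number of cold starts are hypothetical inputs, not real prices; only the 3–4 minute startup figure comes from the scenario above.

```python
def idle_pool_cost(idle_instances: int, vm_hourly_rate: float, hours: float) -> float:
    """Cost of keeping `idle_instances` VMs warm for `hours` at a flat hourly rate."""
    return idle_instances * vm_hourly_rate * hours


def cold_start_wait_minutes(cold_starts: int, minutes_per_start: float) -> float:
    """Total time users spend waiting when every start is a cold start."""
    return cold_starts * minutes_per_start


if __name__ == "__main__":
    # Hypothetical: 2 warm VMs at $0.50/hour, kept idle 24/7 for a 30-day month.
    monthly_cost = idle_pool_cost(idle_instances=2, vm_hourly_rate=0.50, hours=24 * 30)

    # Hypothetical: 10 cold starts per working day, 22 working days,
    # 3.5 minutes per start (midpoint of the 3-4 minute range above).
    monthly_wait = cold_start_wait_minutes(cold_starts=10 * 22, minutes_per_start=3.5)

    print(f"Warm pool cost per month: ${monthly_cost:.2f}")
    print(f"User wait time avoided per month: {monthly_wait:.0f} minutes")
```

Plugging in your own rates and usage pattern shows whether the money spent on idle servers is worth the waiting time it saves.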

The question is how teams manage to keep Databricks up all the time while still keeping costs under control. Doesn’t Snowflake have an edge here, as you are not always keeping the cluster/warehouse active?

One of our main evaluation criteria is user experience (at the same time maintaining low costs). We don’t want people using these platforms to wait for a considerable amount of time to run their queries.

Are there any guidelines for choosing the instance family for a cluster? There are quite a few of them, and we feel our jobs are running slowly because we are not choosing the right one, so any tips would be great. Also, do you recommend SQL warehouses, given that they spin up quite large machines (which can cost more) even for a smaller warehouse?

- Snowflake clusters run within the Snowflake plane, which is why it can repurpose VMs instantaneously for its customers. In Databricks, clusters run in the customer plane (customer VPC or VNet), so acquiring a VM and starting the cluster takes time.

- Databricks also has a serverless option, which starts almost immediately. It’s a new offering where the VMs run in the Databricks plane. Databricks SQL warehouses also have simplified cluster sizing similar to Snowflake’s (t-shirt sizing).

Databricks SQL has set a new world record in 100TB TPC-DS, the gold standard performance benchmark for data warehousing. Databricks SQL outperformed the previous record by 2.2x. Unlike most other benchmark results, this one has been formally audited and reviewed by the TPC council.

These results were corroborated by research from the Barcelona Supercomputing Center, which frequently runs benchmarks that are derivatives of TPC-DS on popular data warehouses. Their latest research benchmarked Databricks and Snowflake, and found that Databricks was 2.7x faster and 12x better in terms of price performance. This result validated the thesis that data warehouses such as Snowflake become prohibitively expensive as data size increases in production.

Note: Snowflake often won't run these benchmarks anymore; they say that their focus is not on performance in a benchmarking context. The benchmarks referenced here are standardized. They're not relevant to every use case, but they're not just made up by Databricks.

As far as Snowflake vs Databricks goes, the biggest difference is that Snowflake stores data in a proprietary format inside its own servers and uses its own servers for compute, so there isn’t that provisioning stage that takes 5 minutes.

Databricks uses mostly open-source software and relies on the cloud providers for compute and storage. For instance, Databricks just deploys a root folder onto S3, connects permissions via instance profiles, and requests EC2 instances for the nodes. On top of what you pay AWS for the EC2 instances, Databricks charges you in DBUs (Databricks Units), and their business model is that their platform will save you money compared to going to the cloud directly.

Snowflake has a bunch of cool features that others lack and that actually help you manage costs. Auto-suspend on a warehouse can be set as low as 1 minute, and the warehouse wakes up almost instantly. You can also have differently sized virtual warehouses for different workloads. The caching technology works really well, and on-demand multi-clustering works great too. Databricks is ages behind in this regard: serverless mode is still only being tested, and it is available only in AWS, not in Azure.

What kind of user experience do teams who have onboarded Databricks give to their users querying it?

Users can run Databricks SQL clusters with a 30–60 minute timeout, so the clusters are essentially up during all business hours, and then have streaming jobs running 24/7.
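The effect of the idle timeout on how long compute stays up can be sketched with a small simulation. This is a simplified model, not either vendor's billing logic: the query start times and durations are hypothetical, and it ignores startup time and any minimum billing increment.

```python
from typing import Iterable


def billed_minutes(query_starts: Iterable[float], query_minutes: float,
                   idle_timeout: float) -> float:
    """Minutes the compute stays up for a set of queries.

    query_starts: query start times in minutes since midnight (hypothetical).
    query_minutes: fixed duration of each query.
    idle_timeout: minutes of inactivity before the compute shuts down.
    """
    total = 0.0
    session_start = None
    shutdown_at = None
    for start in sorted(query_starts):
        if session_start is None:
            # First query starts the compute.
            session_start = start
            shutdown_at = start + query_minutes + idle_timeout
        elif start <= shutdown_at:
            # Compute is still up: the query pushes the shutdown time out.
            shutdown_at = max(shutdown_at, start + query_minutes + idle_timeout)
        else:
            # Compute had already shut down: close the old session, start a new one.
            total += shutdown_at - session_start
            session_start = start
            shutdown_at = start + query_minutes + idle_timeout
    if session_start is not None:
        total += shutdown_at - session_start
    return total


if __name__ == "__main__":
    # Hypothetical day: 2-minute queries at 09:00, 09:20 and 13:00.
    starts = [9 * 60, 9 * 60 + 20, 13 * 60]
    for timeout in (1, 30, 60):
        print(f"timeout={timeout:>2} min -> up for {billed_minutes(starts, 2, timeout):.0f} min")
```

With sparse queries like these, a long timeout keeps the compute running mostly idle, while a short timeout is only comfortable for users when restarts are fast.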

What instance family do you use for streaming job clusters?

A user can use a combination of X1000X and X1002T for streaming jobs and run them on non-premium instances to save money.

What size SQL warehouse did you use for SQL endpoints?

For SQL warehouses, an XL size is used for loading data into cubes and for data science, and an XS size for low-load development work.

What was the query response time for the SQL endpoints? Did any of your users complain about any slowness or wait time?

Since most users consume data via cubes and Power BI, it hasn’t been an issue so far, and the endpoints perform similarly to the previous SQL Server. But if instant response times are crucial, you would consider a proper SQL Server for those.

What’s the cost comparison of this new Databricks offering compared to Snowflake?

The most expensive Databricks rate is $0.55, presumably per DBU. Cluster rates are lower depending on the resources you choose. Expect cores to be the more expensive part and memory to be comparatively cheap.

The separation of compute and storage in Snowflake is way more advanced than in Databricks.

- Databricks compute is customer-managed and takes a long time to start up unless you have EC2 nodes waiting in hot mode, which costs money. Snowflake compute is pretty much serverless and will start in most cases in less than 1 second.

- Databricks compute will not auto-start, which means you have to leave the clusters running to allow users to query Databricks data. Snowflake compute is fully automated and will auto-start in less than a second when a query comes in, without any manual effort.

Just like Databricks, Snowflake can access and write the same data (Parquet, CSV, JSON, ORC, Avro) sitting on external blob stores that you manage.

Databricks is a proprietary software layer based on open-source code. This means neither Delta Lake nor any of the Databricks-specific features will work with anything other than Databricks, so you are locked in to the Databricks software stack for all your workloads. Just because you store the data files on S3 or Azure Blob Storage does not mean you can use them with any other platform at your leisure.

Definitely look at serverless. You can get very similar startup times, and Databricks has made a lot of improvements to Databricks SQL just over the last 12 months. With Databricks, you can also schedule all your production data pipelines, and now you can also call SQL queries from workflows.

I think the Lakehouse concept is going to be the future. Performance is very close on both platforms, and even when something is slower, you are not talking about hours vs minutes.

You get a lot more with Databricks at a lower cost. If you have more predictable workloads, you can also take advantage of reserved compute instances and lower your costs even more. Also, your data is stored in your own account in an open format.

In Snowflake, you want to keep the warehouse open for reporting tools for fast serving and cache reuse, but that costs money. Snowflake handled small ad hoc queries from self-service points quite nicely.

It really depends on how much control you want over your costs and how your contracts are negotiated. Databricks has a lot more customizability, and they have some internal libraries that are useful for data engineers. For instance, Mosaic is a really useful geospatial library that was created inside Databricks.

Snowflake is much more intuitive and similar to a SQL client. Snowflake has their own variant of a Lakehouse buildout called “Snowpark”. Lakehouse isn’t really particular to Databricks; they just follow their own variant called “Medallion Architecture”.

Databricks should have the most optimized libraries for dealing with Delta, but I have not encountered a scenario in operational workloads where those optimizations have mattered. That’s because, unlike in the old days when we used highly customized third-party drivers to squeeze every bit out of the processing, Delta allows consumers to bring their own compute.

In Azure, start-up for Databricks clusters is insane: 15–30 minutes unless you reserve a pool of VMs. This is an issue because AWS Glue can start workers in 5–10 seconds, and this has been a feature for a few years now.

Last but not least, you can use any platform you feel is best for the job, but be aware of the maintenance, cost, and performance factors for anything you implement. Snowflake is especially essential for applications involving advanced analytics and data science. Data scientists primarily utilize R and Python to handle large datasets. Databricks provides a platform for integrated data science and advanced analysis, as well as secure connectivity for these domains.

