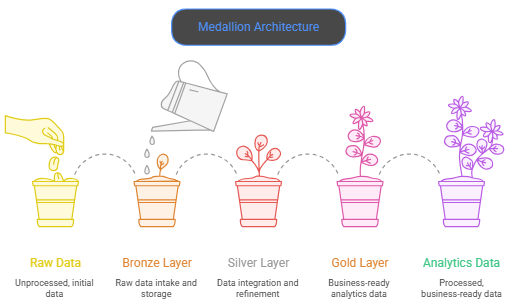

In the late 2010s, as a key part of its lakehouse paradigm, Databricks introduced the Medallion Architecture: a tiered way to organize data, with Bronze for raw intake, Silver for refined integration, and Gold for business-ready analytics.

By 2025, with improvements to Delta Live Tables (DLT) and Unity Catalog, the pattern is not merely workable; it’s a game-changer for businesses that deal with petabyte-scale data.

The Medallion Architecture design pattern addresses the problems of traditional data lakes, such as inconsistent data quality and unclear lineage, by requiring incremental refinement from one layer to the next. This makes it possible to build scalable ELT (Extract, Load, Transform) pipelines that handle everything from real-time streaming to AI/ML workloads.

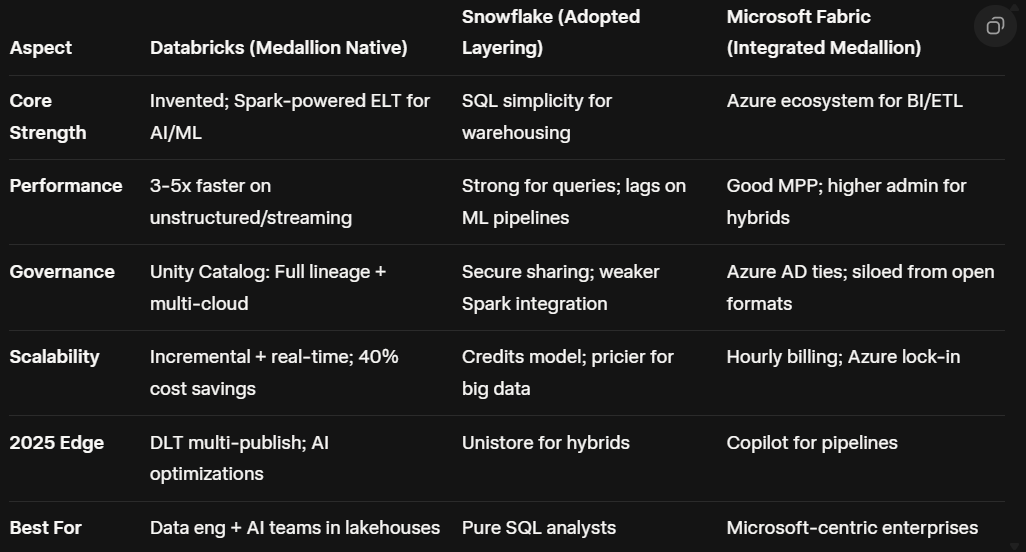

While competitors like Snowflake and Azure Synapse have adopted similar concepts, Databricks remains the gold standard (pun intended) due to its native origins, seamless tooling, and unmatched ecosystem for modern data teams.

Databricks wins for end-to-end Medallion implementations because of its lakehouse foundation. Snowflake is a warehouse champ but lacks Spark’s breadth, while Synapse ties you to Azure and demands more tuning.

✅ Native Invention and Deepest Integration

- Databricks created Medallion, so it’s woven into the platform’s DNA and requires no onerous retrofits. Bronze layers land raw data directly in Delta Lake tables with ACID guarantees and time travel for audits. Silver and Gold then build up incrementally on the same storage, without moving data to another system.

- Lakeflow Declarative Pipelines (the 2025 name for Delta Live Tables): Define Medallion flows in SQL or Python with minimal code, and let the framework handle dependencies, retries, and quality checks automatically. 2025 updates also allow a single pipeline to publish to multiple catalogs and schemas, which streamlines governance across teams.

- Result: Teams deploy end-to-end pipelines 5–10x faster than with custom Spark jobs, with less boilerplate and fewer errors.

✅ Superior Performance and Scalability

- Built on Spark’s distributed engine, Databricks handles hybrid batch and streaming workloads with ease. For example, Bronze ingests Kafka streams in near real time, Silver applies schema enforcement and cleansing via materialized views, and Gold is optimized for BI queries with Z-ordering or liquid clustering.

- Incremental Processing: Transform only new or changed data, cutting compute costs by 40–60% and enabling sub-second latencies for Gold-layer dashboards. Features like Photon (a vectorized query engine) deliver 2–4x speedups on Medallion queries compared with legacy warehouses.

- In 2025 benchmarks, Databricks outperforms on unstructured data (common in Bronze), making it ideal for AI pipelines in which Silver tables feed feature stores directly.
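The idea of "only transform changed data" can be illustrated with a high-water mark. This is a minimal plain-Python sketch, not Databricks code. On the platform, Structured Streaming checkpoints or Delta's change data feed track progress for you. The `Checkpoint` class, the `offset` field, and `to_silver` are hypothetical names. The sketch also assumes that offsets increase monotonically.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Checkpoint:
    """Hypothetical progress marker: the highest Bronze offset already processed."""
    high_water_mark: int = -1


def process_increment(bronze_rows: list[dict], checkpoint: Checkpoint,
                      transform: Callable[[dict], dict]) -> list[dict]:
    """Transform only rows newer than the checkpoint, then advance it.

    Assumes each Bronze row carries a monotonically increasing integer 'offset'.
    """
    new_rows = [r for r in bronze_rows if r["offset"] > checkpoint.high_water_mark]
    # Transform first; if this raises, the checkpoint is untouched and the
    # same rows are picked up again on the next run.
    output = [transform(r) for r in new_rows]
    if new_rows:
        checkpoint.high_water_mark = max(r["offset"] for r in new_rows)
    return output


def to_silver(row: dict) -> dict:
    """Hypothetical Silver transform: keep the key and parse the amount."""
    return {"order_id": row["order_id"], "amount": float(row["amount"])}


bronze = [
    {"offset": 0, "order_id": "A1", "amount": "10.0"},
    {"offset": 1, "order_id": "A2", "amount": "5.5"},
]
cp = Checkpoint()
first_run = process_increment(bronze, cp, to_silver)   # processes A1 and A2
bronze.append({"offset": 2, "order_id": "A3", "amount": "7.25"})
second_run = process_increment(bronze, cp, to_silver)  # processes only A3
```

The second run touches one row instead of three. That difference is where incremental pipelines save compute as Bronze grows.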

✅ Robust Governance and Data Quality

- Unity Catalog: Centralizes metadata across all layers and provides row- and column-level security, lineage tracking, and compliance support (e.g., GDPR audits based on Bronze ingestion timestamps). This turns Medallion into a “single source of truth” without silos.

- Built-in quality gates (DLT expectations, plus integrations with third-party tools such as Great Expectations) validate Silver data progressively and catch issues early. For security-focused setups, it supports quantum-resistant encryption and fine-grained access control, which matter more as threats evolve.

- Adoption: Over 10,000 organizations (including 60% of the Forbes Global 2000) use Databricks, many of them for Medallion pipelines, and case studies show 70% faster ML model training from Gold layers.
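DLT's built-in quality gates come in three policies. `@dlt.expect` keeps invalid rows but records them. `@dlt.expect_or_drop` removes them. `@dlt.expect_or_fail` stops the update. The plain-Python sketch below mimics those three behaviors so they are easy to compare. `apply_expectation`, `Policy`, and `ExpectationFailed` are hypothetical helpers, not the DLT API.

```python
from enum import Enum
from typing import Callable


class Policy(Enum):
    WARN = "warn"  # keep invalid rows, count them (like dlt.expect)
    DROP = "drop"  # remove invalid rows (like dlt.expect_or_drop)
    FAIL = "fail"  # abort the batch (like dlt.expect_or_fail)


class ExpectationFailed(Exception):
    pass


def apply_expectation(rows: list[dict], name: str,
                      predicate: Callable[[dict], bool],
                      policy: Policy) -> tuple[list[dict], dict]:
    """Check rows against one named rule; return (rows_out, metrics)."""
    invalid = [r for r in rows if not predicate(r)]
    metrics = {"expectation": name,
               "passed": len(rows) - len(invalid),
               "failed": len(invalid)}
    if policy is Policy.FAIL and invalid:
        raise ExpectationFailed(f"{name}: {len(invalid)} invalid row(s)")
    if policy is Policy.DROP:
        rows = [r for r in rows if predicate(r)]
    return rows, metrics


silver_candidates = [
    {"order_id": "A1", "amount": 10.0},
    {"order_id": None, "amount": 4.0},
    {"order_id": "A3", "amount": -2.0},
]

# Gate 1 drops rows without a key; gate 2 only flags negative amounts.
kept, m1 = apply_expectation(silver_candidates, "valid_id",
                             lambda r: r["order_id"] is not None, Policy.DROP)
kept, m2 = apply_expectation(kept, "non_negative_amount",
                             lambda r: r["amount"] >= 0, Policy.WARN)
```

Chaining gates this way mirrors the "progressive" validation described above. Hard rules drop or fail early, while softer rules only surface metrics for review.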

✅ Unified Analytics for Data + AI Teams

- Unlike siloed tools, Databricks collapses the stack into one platform for engineering (DLT pipelines), data science (MLflow for Gold-derived models), and analytics (Databricks SQL for ad-hoc queries). This fosters collaboration and cuts handoffs by 50%.

- Extensibility: Integrates natively with dbt, Airflow, and BI tools (Tableau, Power BI), plus multi-cloud support (AWS, Azure, GCP) for hybrid Medallions.

Understanding the Medallion Architecture

The Medallion Architecture is a data design pattern used to logically organize data in a lakehouse, with the goal of incrementally and progressively improving the structure and quality of data as it flows through each layer.

It consists of three main layers:

- Bronze Layer (Raw Data): This layer stores the raw, unprocessed data ingested from various sources. Data in this layer is typically stored in its original format, preserving its fidelity. The primary purpose of the Bronze layer is to provide an immutable archive of the source data.

- Silver Layer (Validated and Enriched Data): This layer contains data that has been cleaned, transformed, and enriched. Data quality checks, deduplication, and standardization are performed in this layer. The Silver layer provides a consistent and reliable view of the data, ready for downstream analytics and reporting.

- Gold Layer (Aggregated and Business-Ready Data): This layer contains data that has been aggregated, summarized, and transformed to meet specific business requirements. Data in the Gold layer is typically optimized for specific use cases, such as reporting, dashboards, and machine learning.
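To make the three layers concrete, here is a minimal plain-Python sketch built on a hypothetical feed of JSON order events:

- Bronze keeps every raw payload untouched.
- Silver parses the payloads, drops malformed records, deduplicates, and standardizes values.
- Gold aggregates revenue per country.

On Databricks each step would typically write a Delta table via Spark or DLT. Here, Python lists stand in for tables, and all field names are assumptions.

```python
import json
from collections import defaultdict
from datetime import datetime, timezone


def ingest_bronze(raw_lines: list[str]) -> list[dict]:
    """Bronze: store each payload verbatim plus ingestion metadata."""
    now = datetime.now(timezone.utc).isoformat()
    return [{"raw": line, "ingested_at": now} for line in raw_lines]


def refine_silver(bronze: list[dict]) -> list[dict]:
    """Silver: parse, drop malformed records, deduplicate, standardize."""
    seen, silver = set(), []
    for rec in bronze:
        try:
            event = json.loads(rec["raw"])
            amount = round(float(event["amount"]), 2)
        except (json.JSONDecodeError, KeyError, TypeError, ValueError):
            continue  # malformed payload stays in Bronze for later inspection
        order_id = event.get("order_id")
        if order_id is None or order_id in seen:
            continue  # missing key or duplicate delivery
        seen.add(order_id)
        silver.append({
            "order_id": order_id,
            "country": str(event.get("country", "")).strip().upper(),
            "amount": amount,
        })
    return silver


def build_gold(silver: list[dict]) -> dict[str, float]:
    """Gold: business-ready aggregate (revenue per country)."""
    revenue = defaultdict(float)
    for row in silver:
        revenue[row["country"]] += row["amount"]
    return {c: round(total, 2) for c, total in sorted(revenue.items())}


raw = [
    '{"order_id": "A1", "country": " us ", "amount": "10.50"}',
    '{"order_id": "A1", "country": "US", "amount": "10.50"}',  # duplicate
    '{"order_id": "A2", "country": "de", "amount": 4}',
    'not json',                                                # malformed
    '{"order_id": "A3", "country": "US", "amount": 2.25}',
]
bronze = ingest_bronze(raw)
silver = refine_silver(bronze)
gold = build_gold(silver)
```

Bronze still holds all five payloads, including the malformed one. That preservation is what lets Silver logic be fixed and replayed later.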

⚙️Databricks: A Natural Fit for Medallion Architecture

Databricks offers a comprehensive suite of tools and services that seamlessly support the implementation of the Medallion Architecture. Here’s why it stands out:

🔏 Unified Platform for Data Engineering and Data Science

Databricks provides a unified platform that caters to both data engineers and data scientists. This eliminates the need for separate tools and infrastructure for data processing and analytics, streamlining the entire data lifecycle.

- Spark as the Core Engine: Databricks is built on Apache Spark, a powerful and scalable distributed processing engine. Spark’s ability to handle large volumes of data efficiently makes it ideal for processing data in all three layers of the Medallion Architecture.

- SQL Analytics: Databricks SQL allows data analysts and business users to query data directly from the lakehouse using standard SQL. This enables self-service analytics and reduces the reliance on data engineering teams for ad-hoc reporting.

- Machine Learning Capabilities: Databricks provides a comprehensive set of tools for building and deploying machine learning models, including MLflow for model management and tracking. This allows data scientists to leverage the data in the Gold layer to build predictive models and gain insights.
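The self-service SQL point can be shown with a small example. The sketch uses Python's built-in `sqlite3` as a local stand-in for a Databricks SQL warehouse, purely so it runs anywhere. The table `gold_daily_revenue` and its columns are hypothetical. The query is the kind of plain SQL an analyst would run against a Gold table.

```python
import sqlite3

# In-memory database standing in for the lakehouse's Gold schema.
conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE gold_daily_revenue (
        sale_date TEXT,
        country   TEXT,
        revenue   REAL
    )
""")
conn.executemany(
    "INSERT INTO gold_daily_revenue VALUES (?, ?, ?)",
    [
        ("2025-01-01", "US", 120.0),
        ("2025-01-01", "DE", 80.0),
        ("2025-01-02", "US", 95.5),
        ("2025-01-02", "DE", 101.0),
    ],
)

# Ad-hoc analyst question: total revenue per country, highest first.
rows = conn.execute("""
    SELECT country, SUM(revenue) AS total_revenue
    FROM gold_daily_revenue
    GROUP BY country
    ORDER BY total_revenue DESC
""").fetchall()
conn.close()
```

Gold tables are already cleaned and shaped for the business question, so analyst queries stay short. On Databricks the same SQL would target a Unity Catalog name of the form `catalog.schema.table`.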

🔏 Delta Lake: Reliable and Performant Data Lakehouse

Delta Lake is an open-source storage layer that brings ACID transactions, schema enforcement, and data versioning to data lakes. It is deeply integrated with Databricks and is a crucial component for building a reliable and performant Medallion Architecture.

- ACID Transactions: Delta Lake ensures data consistency and reliability by providing ACID (Atomicity, Consistency, Isolation, Durability) transactions. This prevents data corruption and ensures that data is always in a consistent state, even in the face of concurrent writes and failures.

- Schema Enforcement and Evolution: Delta Lake enforces schema on write, ensuring that only data that conforms to the defined schema is written to the lakehouse. It also supports schema evolution, allowing you to update the schema of your data over time without breaking existing pipelines.

- Time Travel: Delta Lake’s time travel feature allows you to query previous versions of your data. This is useful for auditing, debugging, and reproducing past results.

- Optimized Performance: Delta Lake provides several performance optimizations, such as data skipping, caching, and indexing, which significantly improve query performance.
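The toy class below is not Delta Lake. It is a plain-Python model of three of the ideas listed above:

- Each commit creates a new immutable version, which is what enables time travel.
- Rows with unexpected columns or mismatched types are rejected (schema enforcement).
- New columns are accepted only when the caller opts in (schema evolution), loosely analogous to Delta's `mergeSchema` write option.

Real Delta Lake records file-level actions in a transaction log rather than copying rows. In SQL you would time-travel with `VERSION AS OF`. `VersionedTable` and its methods are hypothetical.

```python
from typing import Optional


class VersionedTable:
    """Toy model of a versioned table: every commit adds an immutable snapshot."""

    def __init__(self, schema: dict[str, type]):
        self.schema = dict(schema)
        self._versions: list[list[dict]] = [[]]  # version 0 is the empty table

    @property
    def version(self) -> int:
        return len(self._versions) - 1

    def append(self, rows: list[dict], merge_schema: bool = False) -> int:
        """Validate every row, then commit all of them as one new version."""
        schema = dict(self.schema)  # working copy; discarded if validation fails
        for row in rows:
            new_cols = set(row) - set(schema)
            if new_cols and not merge_schema:
                raise ValueError(f"unexpected columns {sorted(new_cols)}")
            for col in sorted(new_cols):
                if row[col] is not None:
                    schema[col] = type(row[col])  # schema evolution
            for col, value in row.items():
                if value is not None and not isinstance(value, schema[col]):
                    raise TypeError(f"column {col!r} expects {schema[col].__name__}")
        # All-or-nothing commit: reached only if every row passed validation.
        self._versions.append(self._versions[-1] + [dict(r) for r in rows])
        self.schema = schema
        return self.version

    def read(self, version: Optional[int] = None) -> list[dict]:
        """Return the latest snapshot, or time-travel to an earlier version."""
        snapshot = self._versions[-1 if version is None else version]
        return [dict(r) for r in snapshot]


orders = VersionedTable({"order_id": str, "amount": float})
orders.append([{"order_id": "A1", "amount": 10.5}])   # -> version 1

try:
    orders.append([{"order_id": "A2", "amount": 3.0, "coupon": "X"}])
except ValueError:
    pass  # rejected by schema enforcement; no version is created

orders.append([{"order_id": "A2", "amount": 3.0, "coupon": "X"}],
              merge_schema=True)                       # -> version 2
```

Because validation happens before the commit, a bad batch never produces a half-written version. This mirrors, in miniature, why ACID commits matter for Bronze-to-Silver writes.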

🔏 Delta Live Tables (DLT): Simplified ETL Pipeline Development

Delta Live Tables (DLT) is a declarative framework for building and managing ETL pipelines. It simplifies the development and deployment of data pipelines by automatically handling tasks such as data quality monitoring, error handling, and infrastructure management.

- Declarative Pipeline Definition: DLT allows you to define your data pipelines using a simple, declarative syntax. This makes it easier to understand and maintain your pipelines.

- Automatic Data Quality Monitoring: DLT automatically monitors the quality of your data and provides alerts when data quality issues are detected. This helps you to identify and resolve data quality problems early on.

- Automatic Error Handling: DLT automatically retries failed pipeline updates. Because each table update is committed as a Delta transaction, a failure does not leave partially written data behind. This keeps errors from cascading through your pipelines and keeps downstream tables consistent.

- Automatic Infrastructure Management: DLT automatically manages the underlying infrastructure required to run your pipelines. This eliminates the need for you to manually provision and configure resources.

🔏 Collaboration and Governance

Databricks provides features that facilitate collaboration and governance, which are essential for managing data in a Medallion Architecture.

- Shared Workspaces: Databricks allows you to create shared workspaces where data engineers, data scientists, and business users can collaborate on data projects.

- Access Control: Databricks provides granular access control features that allow you to control who can access your data and what they can do with it.

- Auditing and Monitoring: Databricks provides auditing and monitoring features that allow you to track data lineage and monitor the performance of your pipelines.
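In Unity Catalog, granular access control is expressed in SQL, for example through grants plus row filters and column masks attached to tables. As a rough mental model only, the plain-Python sketch below applies two policies to a query result. A hypothetical row filter shows users only their own region's rows. A hypothetical column mask hides raw email addresses from anyone outside a `pii_readers` group. The `User` type, the group names, and the masking format are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    name: str
    region: str
    groups: set[str] = field(default_factory=set)


def row_filter(user: User, row: dict) -> bool:
    """Hypothetical policy: admins see every row, others only their region."""
    return "admins" in user.groups or row["region"] == user.region


def mask_email(user: User, email: str) -> str:
    """Hypothetical policy: only 'pii_readers' see the full address."""
    if "pii_readers" in user.groups:
        return email
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def secure_read(user: User, rows: list[dict]) -> list[dict]:
    """Apply the row filter first, then mask the sensitive column."""
    return [
        {**row, "email": mask_email(user, row["email"])}
        for row in rows
        if row_filter(user, row)
    ]


customers = [
    {"id": 1, "region": "EU", "email": "ana@example.com"},
    {"id": 2, "region": "US", "email": "bob@example.com"},
]

analyst = User("dana", region="EU")
steward = User("lee", region="US", groups={"admins", "pii_readers"})
```

With this setup, `secure_read(analyst, customers)` returns only the EU row with a masked email, while the steward sees both rows unmasked. The key design point is that policies live with the data rather than in each consuming tool.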

🔏 Scalability and Cost-Effectiveness

Databricks is designed to scale to handle large volumes of data and complex workloads. Its cloud-native architecture allows you to easily scale your resources up or down as needed, ensuring that you only pay for what you use.

- Auto-Scaling Clusters: When autoscaling is enabled, Databricks adds or removes workers between a configured minimum and maximum based on the workload. This ensures that you have the resources you need to process your data efficiently without paying for idle capacity.

- Spot Instances: Databricks supports the use of spot instances, which can significantly reduce the cost of running your data pipelines.
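Autoscaling and spot usage are both set in the cluster definition. The sketch below builds a cluster specification as a Python dictionary, with field names modeled on the Databricks Clusters API: `autoscale.min_workers` / `max_workers` and, on AWS, `aws_attributes.availability` with `first_on_demand`. Verify these fields against the current API reference before relying on them. The runtime version and node type are placeholders, and `cluster_spec` is a hypothetical helper.

```python
import json


def cluster_spec(name: str, min_workers: int, max_workers: int,
                 use_spot: bool = True) -> dict:
    """Build an autoscaling cluster spec (hypothetical helper, AWS-style attributes)."""
    if not 0 <= min_workers <= max_workers:
        raise ValueError("require 0 <= min_workers <= max_workers")
    spec = {
        "cluster_name": name,
        "spark_version": "<runtime-version>",  # placeholder: pick a current runtime
        "node_type_id": "<node-type>",         # placeholder: cloud-specific
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
    }
    if use_spot:
        spec["aws_attributes"] = {
            # The first node (the driver) stays on on-demand capacity; the
            # remaining nodes use spot and fall back to on-demand if needed.
            "first_on_demand": 1,
            "availability": "SPOT_WITH_FALLBACK",
        }
    return spec


spec = cluster_spec("medallion-silver", min_workers=2, max_workers=8)
payload = json.dumps(spec, indent=2)  # request body for a cluster-create call
```

Keeping the driver on on-demand capacity is a common hedge. Losing a spot worker only slows a job down, whereas losing the driver fails it.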

✍️Advantages over Alternative Solutions

While other platforms can be used to implement the Medallion Architecture, Databricks offers several advantages:

- Tight Integration: Databricks provides a tightly integrated platform that is specifically designed for building and managing data lakehouses. This eliminates the need to integrate disparate tools and services.

- Simplified Development: Databricks simplifies the development of data pipelines with features like Delta Live Tables and SQL Analytics.

- Optimized Performance: Databricks provides several performance optimizations that significantly improve query performance.

- Collaboration and Governance: Databricks provides features that facilitate collaboration and governance, which are essential for managing data in a Medallion Architecture.

✍️Head-to-Head: Databricks vs. Competitors in 2025

These days, most major data platforms support Medallion-style patterns, but Databricks’ lakehouse-native execution stands out.

Databricks leads in end-to-end adoption, especially for teams that favor ELT over rigid ETL.

✍️Caveats and Alternatives

It’s not one-size-fits-all. For lightweight SQL needs, Snowflake’s ease of use wins, and Azure shops may prefer Fabric’s seamless integration. Databricks’ Spark learning curve can intimidate beginners, and costs rise without optimization. Still, for realizing Medallion’s full potential (turning raw data into AI gold), it’s the premier choice, as echoed in September 2025 whitepapers.

Conclusion

Databricks stands out as the premier provider for Medallion Architecture due to its unified platform, Delta Lake’s reliability and performance, Delta Live Tables’ simplified ETL development, robust collaboration and governance features, and scalability and cost-effectiveness.

By leveraging these capabilities, organizations can build and manage data pipelines that deliver high-quality, business-ready data for analytics and machine learning. Its comprehensive suite of tools and services makes it the ideal choice for organizations looking to build a modern data lakehouse based on the Medallion Architecture.
