In an era where artificial intelligence is reshaping enterprise operations, Microsoft has positioned SQL Server 2025 as a cornerstone of its AI strategy by introducing Flexible AI Model Management — a feature that fundamentally reimagines how databases interact with machine learning ecosystems. This capability, which allows for the declarative registration, management, and invocation of external AI models via T-SQL, is not merely an incremental update but a deliberate evolution to address the explosive growth of AI-driven applications.

As databases transition from passive data stores to active intelligence hubs, Microsoft’s rationale centers on bridging the gap between structured data management and dynamic AI workflows, ensuring organizations can harness AI securely, scalably, and without the friction of silos or vendor lock-in.

- Key Driver (Pace of AI Advancements): More than 3,400 organizations applied for SQL Server 2025’s private preview, adoption twice as fast as SQL Server 2022, and Microsoft is capitalizing on an “AI frontier” where models evolve faster than the infrastructure that serves them. This feature abstracts REST inference endpoints into database-native objects (via CREATE EXTERNAL MODEL), allowing quick swaps between providers like Azure OpenAI or Ollama and keeping SQL Server a future-proof platform for embedding tasks and vector creation.

- Quote from Microsoft: “For over 35 years, SQL Server has been an industry leader in providing secure, high-performance data management.” By extending this legacy to AI, the feature empowers DBAs and developers to operationalize models without custom APIs, reducing deployment time from weeks to hours.

This motivation aligns with broader industry trends, as per Microsoft’s Work Trend Index, where “frontier firms” leverage AI agents with organization-wide context — necessitating databases that natively ingest and query embeddings.
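The endpoint abstraction described above, where a REST inference endpoint is registered under a name and the provider behind that name can later be swapped, can be illustrated with a small Python sketch. This is a conceptual toy, not SQL Server's API: the `ExternalModel` and `ModelRegistry` classes, the `doc_embedder` name, and the URLs and model names are all hypothetical placeholders chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class ExternalModel:
    """Hypothetical stand-in for a registered external model object."""
    location: str    # REST inference endpoint URL (placeholder values below)
    api_format: str  # which provider's request/response format to use
    model: str       # provider-side model name


class ModelRegistry:
    """Toy registry: callers refer to a model by name, never by endpoint."""

    def __init__(self) -> None:
        self._models: Dict[str, ExternalModel] = {}

    def create(self, name: str, model: ExternalModel) -> None:
        # Registering the same name twice is treated as an error.
        if name in self._models:
            raise ValueError(f"model {name!r} already exists")
        self._models[name] = model

    def alter(self, name: str, model: ExternalModel) -> None:
        # Re-point an existing name at a different endpoint or provider.
        if name not in self._models:
            raise KeyError(name)
        self._models[name] = model

    def resolve(self, name: str) -> ExternalModel:
        return self._models[name]


registry = ModelRegistry()

# Register a cloud-hosted embedding model (illustrative values only).
registry.create("doc_embedder", ExternalModel(
    location="https://example.invalid/openai/embeddings",
    api_format="Azure OpenAI",
    model="text-embedding-3-small",
))

# Swap to a locally hosted model; code that resolves "doc_embedder"
# by name does not need to change.
registry.alter("doc_embedder", ExternalModel(
    location="http://localhost:11434/api/embed",
    api_format="Ollama",
    model="all-minilm",
))
```

The design point is the indirection: consumers depend on the registered name, so changing providers is a metadata update rather than an application change.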

2. Empowering Developers: Breaking Down Data Silos for Frictionless AI Workflows

A primary “why” is to democratize AI for every developer, eliminating the barriers that silo operational data from analytical AI processes. Flexible AI Model Management provides a T-SQL-first interface for model lifecycle tasks — registration, alteration, and invocation — integrated with functions like AI_GENERATE_EMBEDDINGS and AI_GENERATE_CHUNKS. This declarative approach lets users generate vectors from text inputs directly in queries, feeding them into vector indexes for semantic search or RAG pipelines, all while supporting frameworks like LangChain and Semantic Kernel.
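The chunk, embed, and search flow described above can be sketched in plain Python to show the moving parts. This is a minimal sketch under loud assumptions: `chunk_text`, `toy_embed`, and `search` are hypothetical helpers, and the hashed bag-of-words "embedding" is a deliberately crude stand-in for a real model endpoint. It does not reproduce the behavior of AI_GENERATE_CHUNKS, AI_GENERATE_EMBEDDINGS, or a vector index.

```python
import hashlib
import math
from typing import List


def chunk_text(text: str, size: int, overlap: int = 0) -> List[str]:
    """Fixed-size character chunks; consecutive chunks share `overlap` chars."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    step = size - overlap
    return [text[i:i + size] for i in range(0, len(text), step)]


def toy_embed(text: str, dims: int = 64) -> List[float]:
    """Hashed bag-of-words vector, L2-normalized. NOT a real embedding model."""
    vec = [0.0] * dims
    for raw in text.lower().split():
        token = raw.strip(".,;:!?")
        if not token:
            continue
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        vec[int.from_bytes(digest[:4], "big") % dims] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def cosine(a: List[float], b: List[float]) -> float:
    """Dot product; equals cosine similarity because inputs are unit vectors."""
    return sum(x * y for x, y in zip(a, b))


def search(query: str, chunks: List[str], top_k: int = 1) -> List[str]:
    """Brute-force nearest-neighbor search over the chunk embeddings."""
    q = toy_embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, toy_embed(c)), reverse=True)
    return ranked[:top_k]


# Illustrative run: split a document, then find the chunk closest to a query.
document = (
    "Refunds are issued within five days. "
    "Shipping to Europe takes two weeks. "
    "Support is available by email."
)
pieces = chunk_text(document, size=40, overlap=8)
best = search("how long does shipping to europe take", pieces, top_k=1)
print(best)
```

In a database-native version, each of these steps would be a function call or index lookup inside a query, which is the consolidation the paragraph above describes.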

- Developer-Centric Benefits: It transforms SQL Server into a “vector database in its own right,” with built-in filtering and simplified embedding workflows. Developers can now build AI apps “from ground to cloud” without exporting data, fostering innovation in scenarios like real-time fraud detection or personalized recommendations.

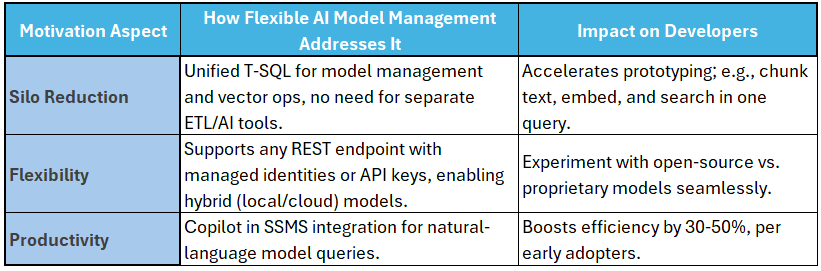

| Motivation Aspect | How Flexible AI Model Management Addresses It | Impact on Developers |
| --- | --- | --- |
| Silo Reduction | Unified T-SQL for model management and vector ops, no need for separate ETL/AI tools. | Accelerates prototyping; e.g., chunk text, embed, and search in one query. |
| Flexibility | Supports any REST endpoint with managed identities or API keys, enabling hybrid (local/cloud) models. | Experiment with open-source vs. proprietary models seamlessly. |
| Productivity | Copilot in SSMS integration for natural-language model queries. | Boosts efficiency by 30–50%, per early adopters. |

- Strategic Vision: Microsoft aims to “empower every developer on the planet to do more with data,” converging structured, unstructured, transactional, and operational data into AI agents. This feature is pivotal in that convergence, as it allows real-time replication to Fabric for analytics, ensuring AI insights draw from fresh, governed data.

Customer stories underscore this: organizations like The ODP Corporation use similar Azure integrations to cut HR data processing from 24 hours to real time, illustrating how model management connects back-end data to AI-driven front ends.

3. Prioritizing Security, Scalability, and Enterprise Readiness

Security isn’t an afterthought; it’s baked in. With data breaches costing millions, Microsoft introduces this feature to keep AI operations within the database’s fortified perimeter, using encrypted credentials and isolated sessions. Models are invoked over HTTPS, which protects data in transit and aligns with zero-trust principles and compliance regimes such as GDPR.

- Scalability Rationale: As AI workloads scale to billions of inferences, the feature leverages SQL Server’s parallelism for batch embeddings and DiskANN indexing, delivering sub-second responses on terabyte datasets. This is crucial for enterprises managing hybrid estates, where Azure Arc extends cloud governance to on-premises SQL.

- Business Imperative: In a post-2025 landscape, where AI agents automate 40% of knowledge work (per Microsoft studies), databases must evolve to fuel this without performance bottlenecks. Flexible AI Model Management ensures SQL Server 2025 supports “secure, high-performance data management” for AI, transforming it from a transactional engine into a competitive AI enabler.

Microsoft’s Broader Ecosystem Play: Fabric, Azure, and Beyond

This feature isn’t isolated; it’s woven into Microsoft’s “converged ecosystem,” mirroring data to Fabric’s OneLake for zero-ETL analytics and integrating with Azure AI Foundry for model routing. The “why” extends to avoiding lock-in: by supporting diverse endpoints, Microsoft invites multi-cloud AI while still steering customers toward its own stack, as the rapid preview uptake suggests.

In summary, Microsoft provides Flexible AI Model Management to propel SQL Server into the AI era — fueling innovation, securing data sovereignty, and empowering seamless integration that turns databases into AI accelerators. As one preview note encapsulates: SQL Server 2025 “builds on previous releases to grow [it] as a platform that gives you choices,” ensuring AI isn’t a bolt-on but the new baseline.
